Over Christmas I typed up my Bass-Serre / Grzegorczyk stuff in quite a lot of detail and fairly rigorously. It’s far too much to put up here as blog posts, so here’s a link to the PDF.

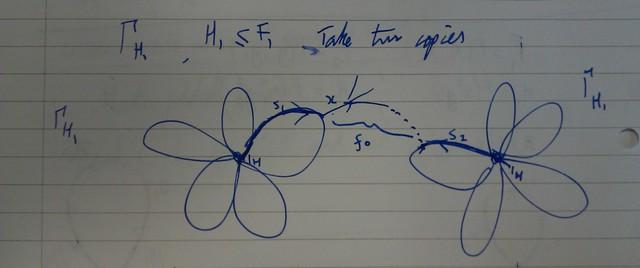

The big theorem I prove says that the fundamental group of a graph of -computable groups is -computable. But are there cases when the fgoagog stays at , and can we pin down what conditions cause it to move up to ? I’ve found a couple of examples which begin to answer those questions.

Corollary 1 says that if the edge groups are finitely generated then you don’t go up a level, while Corollary 2 gives an example of a graph with infinitely generated edge groups that does go up a level.

Little Facts

- The th prime .

- .

- The Gödel encoding of a word of length on an alphabet is , an function.

Theorem 6.7 (from the paper)

Suppose we have , where is finite. Suppose all the are finitely generated -computable groups, . Assume all the identified subgroups are -decidable, and all the isomorphisms are -computable. Then is -computable.

Corollary 1

Same assumptions as the Theorem 6.7, but also assume that the edge groups are finitely generated. So each edge homomorphism sends a finitely generated subgroup to another finitely generated subgroup . Suppose everything is -computable.

Then is -computable.

Proof

In the algorithm from Theorem 6.7, the potential for unbounded recursion comes from applying the edge homomorphisms repeatedly. We will show that the result of applying the edge homomorphisms repeatedly is bounded by an function.

Let be a word on of length . An upper bound on the number of syllables in is , so let’s say there are applications of edge homomorphisms.

A homomorphism rewrites generators as words . As is finitely generated, there is a word of maximum, finite length . So a word of length is rewritten to a word of length at most .

Because the graph is finite, we can find a greatest . So after reducing all the syllables (assuming that can be done for the input word), the resulting word in has length at most .

Hence the index of the resulting word is bounded by

an function, by Fact 2. So recursion on the edge homomorphisms is bounded by an function, and so is -computable.

Corollary 2

We will construct a graph of -computable groups whose fundamental group is -computable.

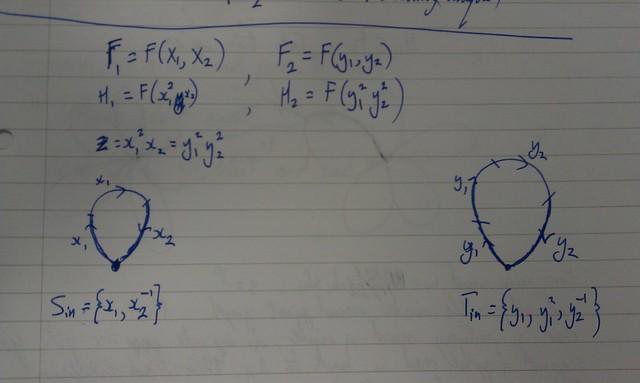

Let be two copies of the free group on two generators, so and . The graph has a single edge from to , so .

Let , . So the edge groups are infinitely generated.

Define

Then is -computable.

Proof

First of all, the homomorphisms are -computable because words of the form can be recognised by an function. Hence all the ingredients of the graph of groups are -computable.

A word of the form

will be reduced to a word on whose length is proportional to , where is the number of syllables. As depends on the input, this length can only be computed by unbounded recursion on an function, so is only -computable.

Therefore, is -computable.